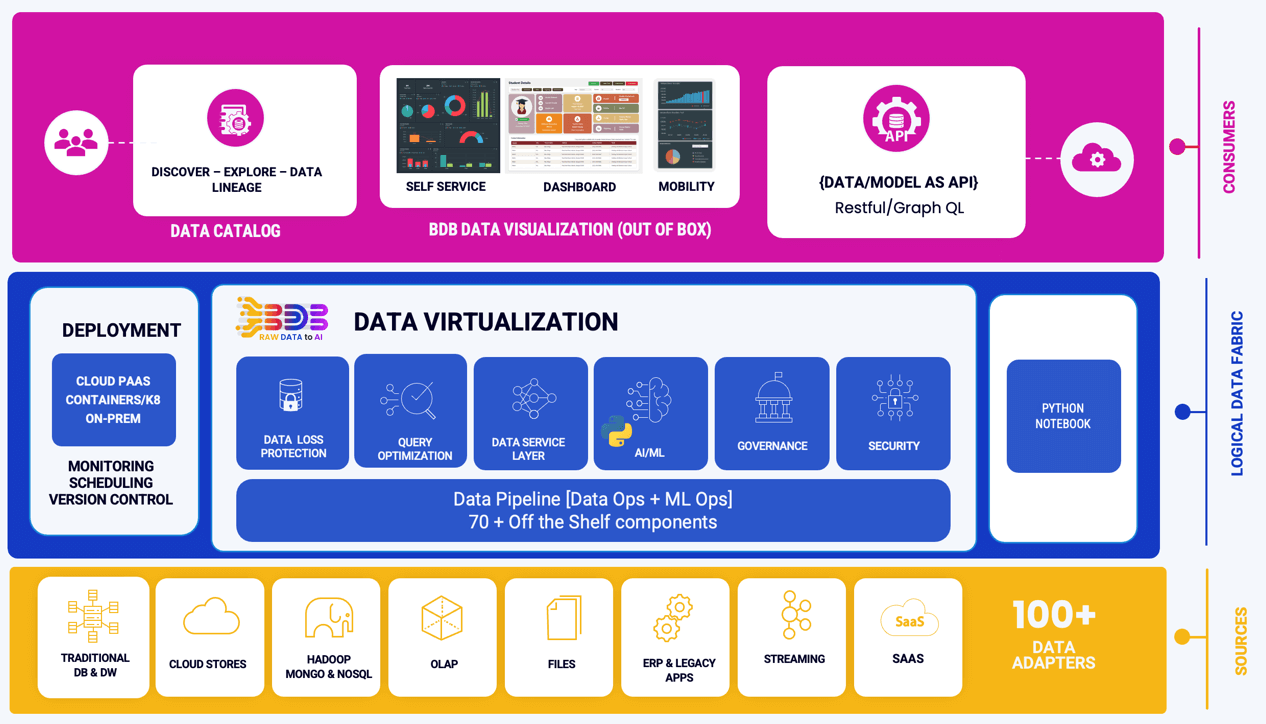

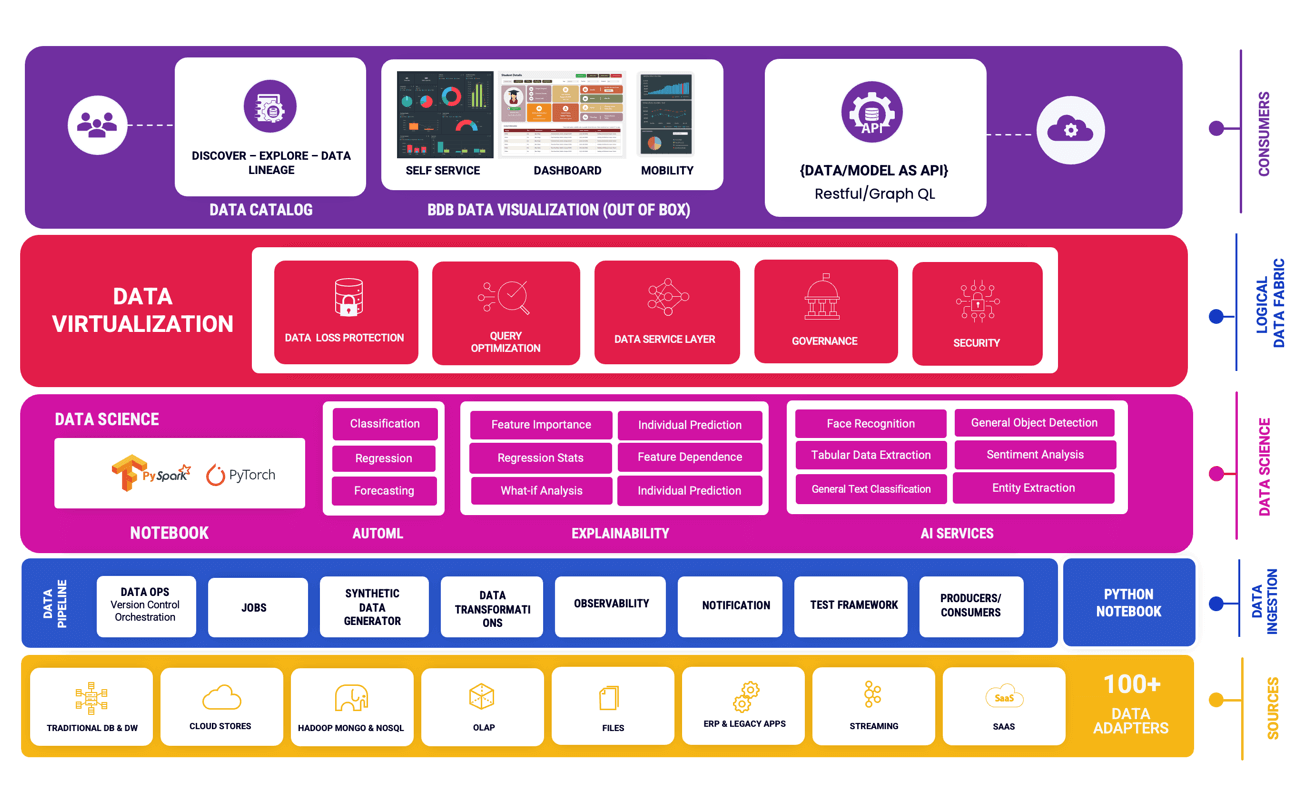

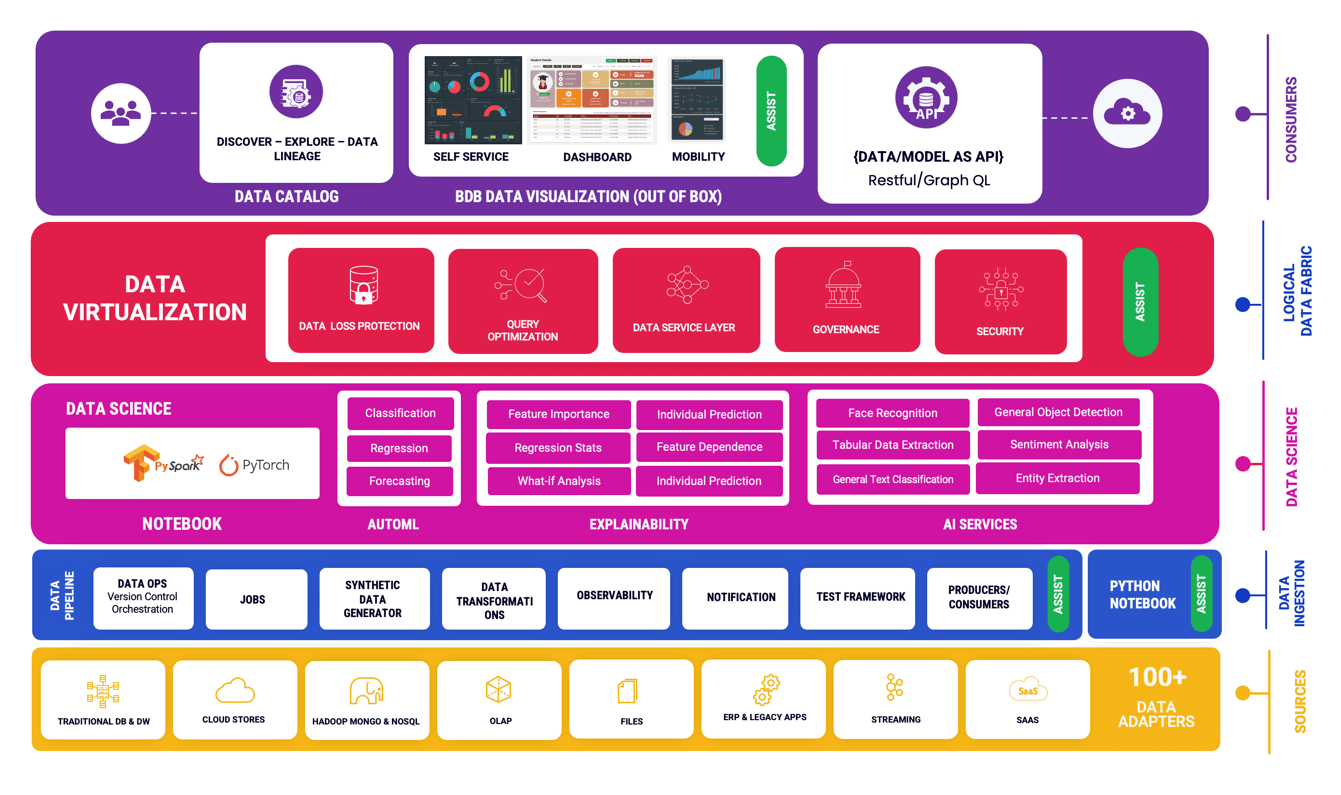

BDB is a comprehensive end-to-end platform that addresses all four facets of contemporary data analytics. It seamlessly installs on any cloud or on-premises infrastructure and efficiently connects with diverse databases, functioning as a cost-effective data lake solution.

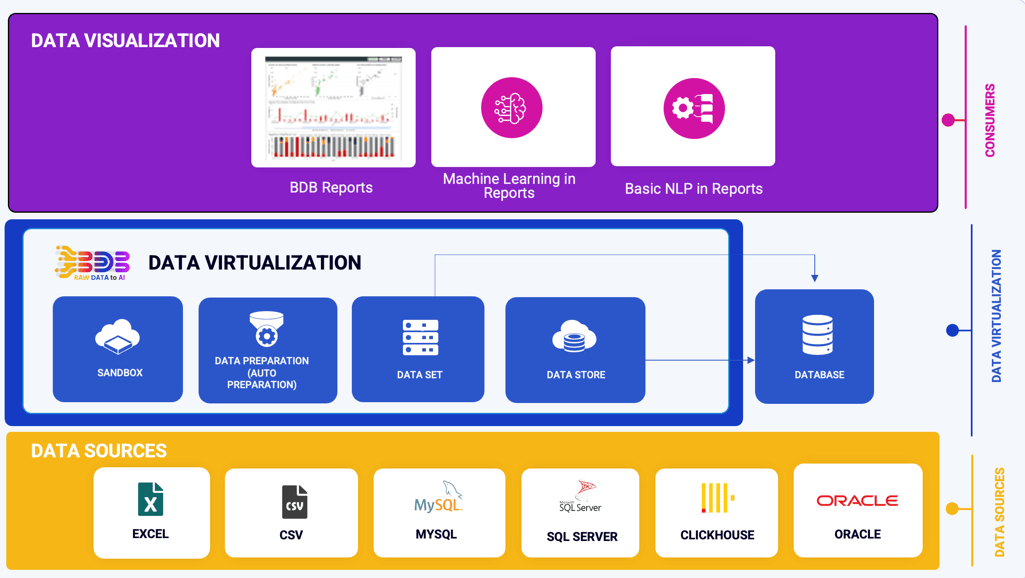

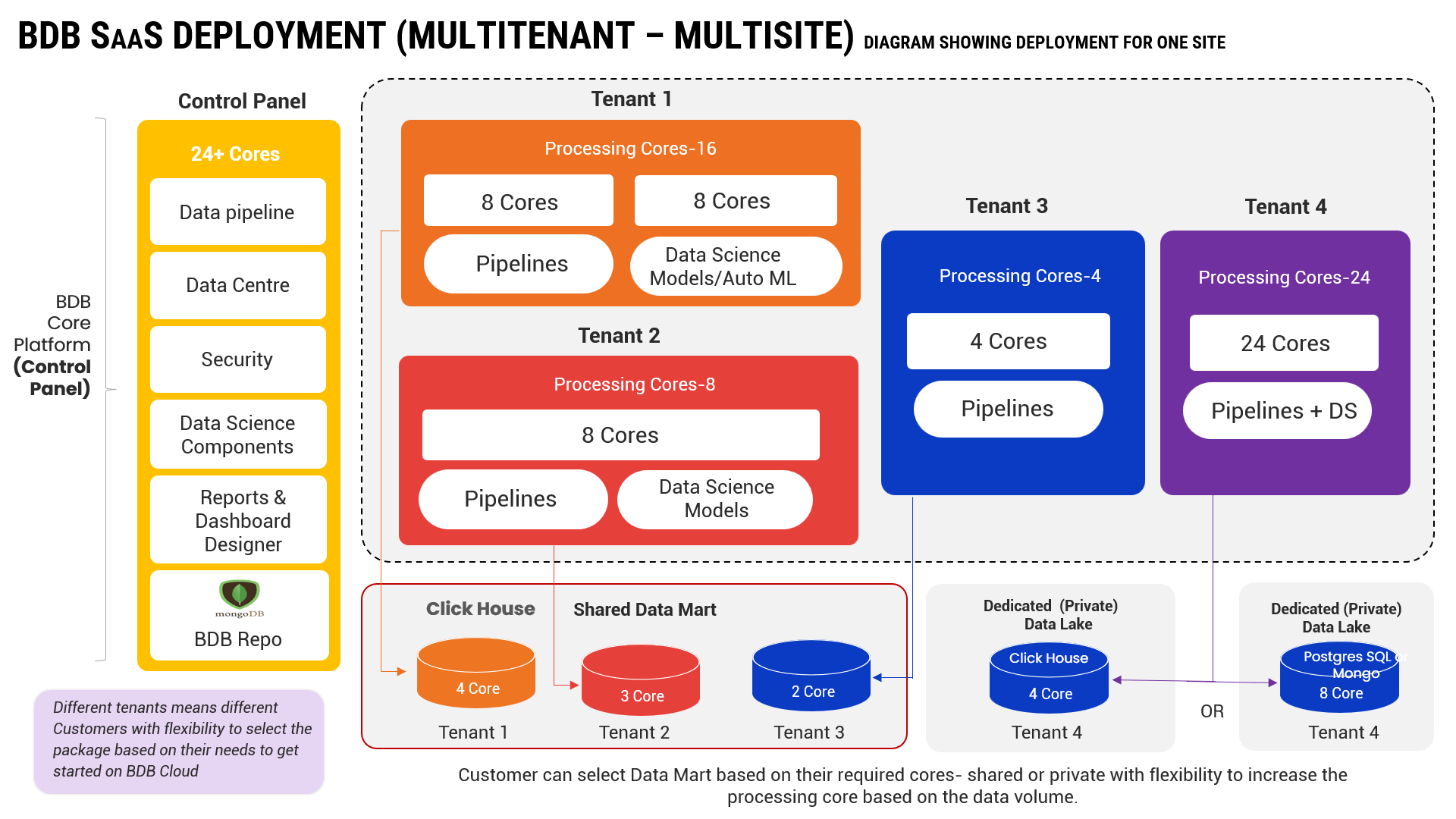

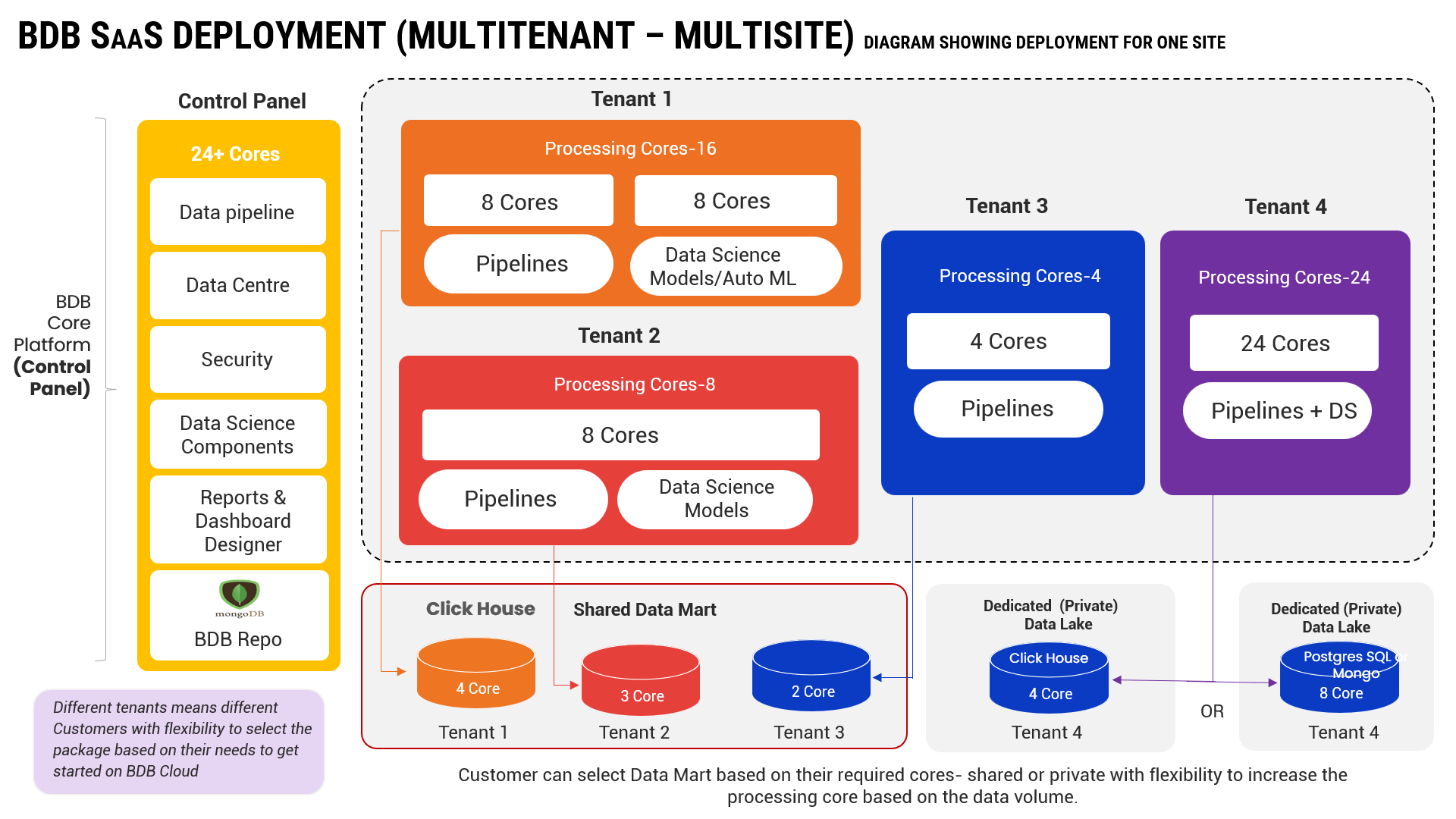

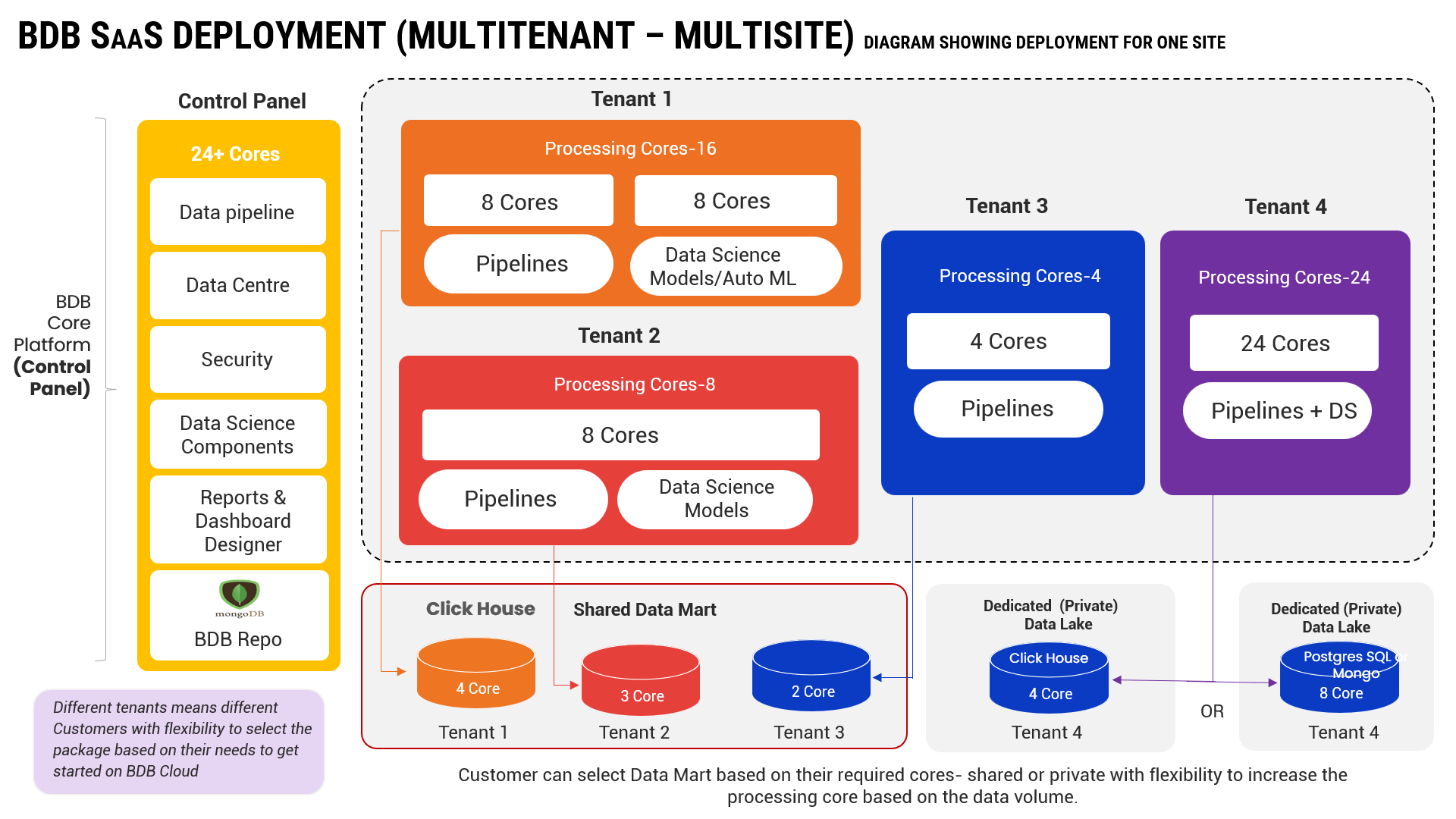

Get started with analytics quickly through a multisite, multitenant deployment on the leading cloud provider, backed by robust security measures.

This includes the Sandbox, the Data Preparation module, Data Virtualisation, and self-service reports with ML in the browser.

To seamlessly integrate your desktop and local data into the BDB platform, leverage the Sandbox or choose from the array of connectors available within the platform. Employ the Data Preparation tools to efficiently clean and refine your data. Establish a robust Data Store, often referred to as the BDB Cube, to organize and optimize your data for analysis. Within a matter of minutes, visualize your refined data using the platform's intuitive features.

Data Visualization Segment

Identify and eliminate anomalous records effortlessly, and purge unwanted datasets using smart machine-learning techniques and sampling. Apply modifications to datasets and export analysis-ready data with just a few clicks.
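As a rough illustration of what removing anomalous records can mean in practice, here is a minimal Python sketch. It does not use BDB's machine-learning techniques, which are not described here. It swaps in a plain interquartile-range (IQR) rule instead, and the function name `drop_outliers`, the field `amount`, and the sample rows are all hypothetical.

```python
import statistics

def drop_outliers(records, key, k=1.5):
    """Keep records whose `key` value lies within k * IQR of the quartiles.

    This is a simple statistical rule used for illustration only,
    not the platform's own anomaly detection.
    """
    values = sorted(r[key] for r in records)
    q1, _, q3 = statistics.quantiles(values, n=4)
    iqr = q3 - q1
    low, high = q1 - k * iqr, q3 + k * iqr
    return [r for r in records if low <= r[key] <= high]

# Hypothetical sample data: one value is far outside the usual range.
rows = [{"id": i, "amount": a} for i, a in enumerate([10, 12, 11, 13, 12, 11, 500])]
clean = drop_outliers(rows, "amount")
print(len(rows), "->", len(clean))
```

The multiplier `k` controls how aggressive the filter is. A larger value keeps more borderline records.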

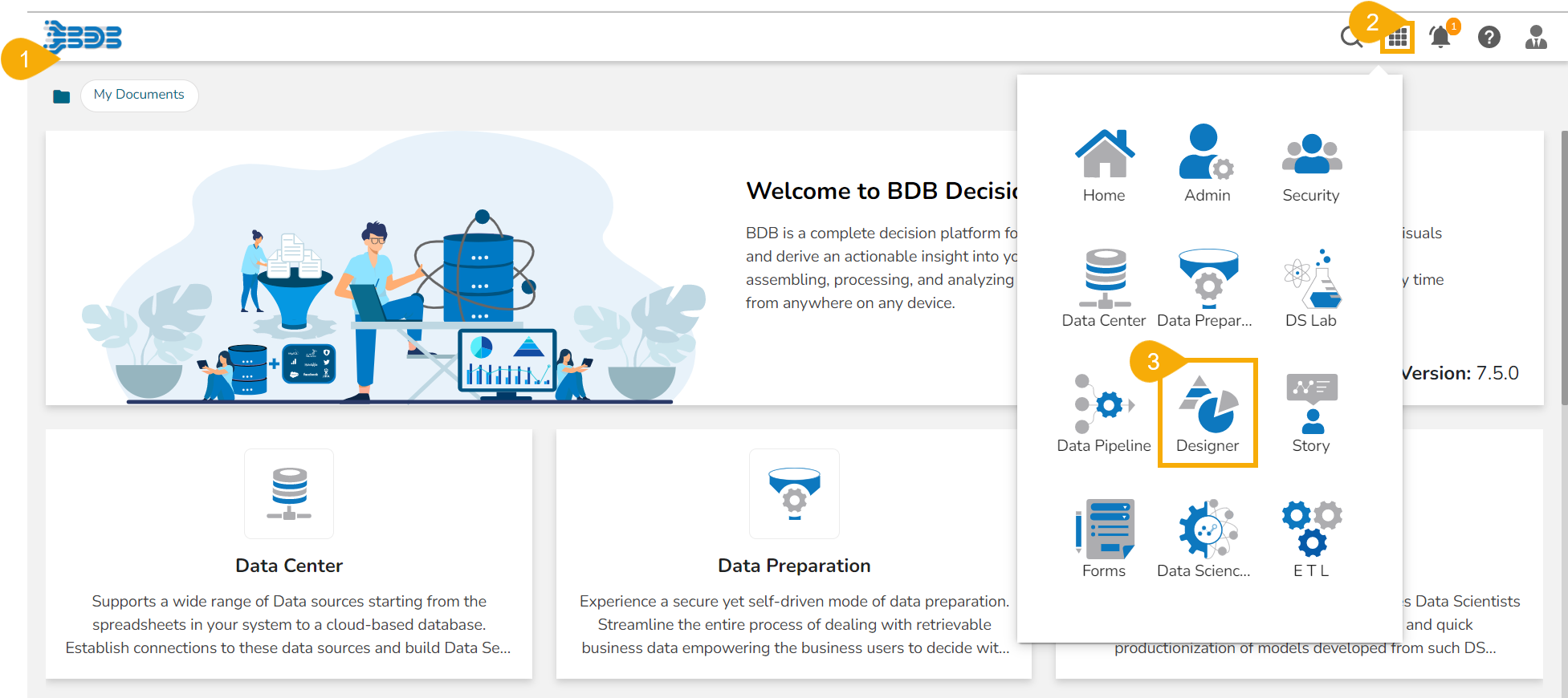

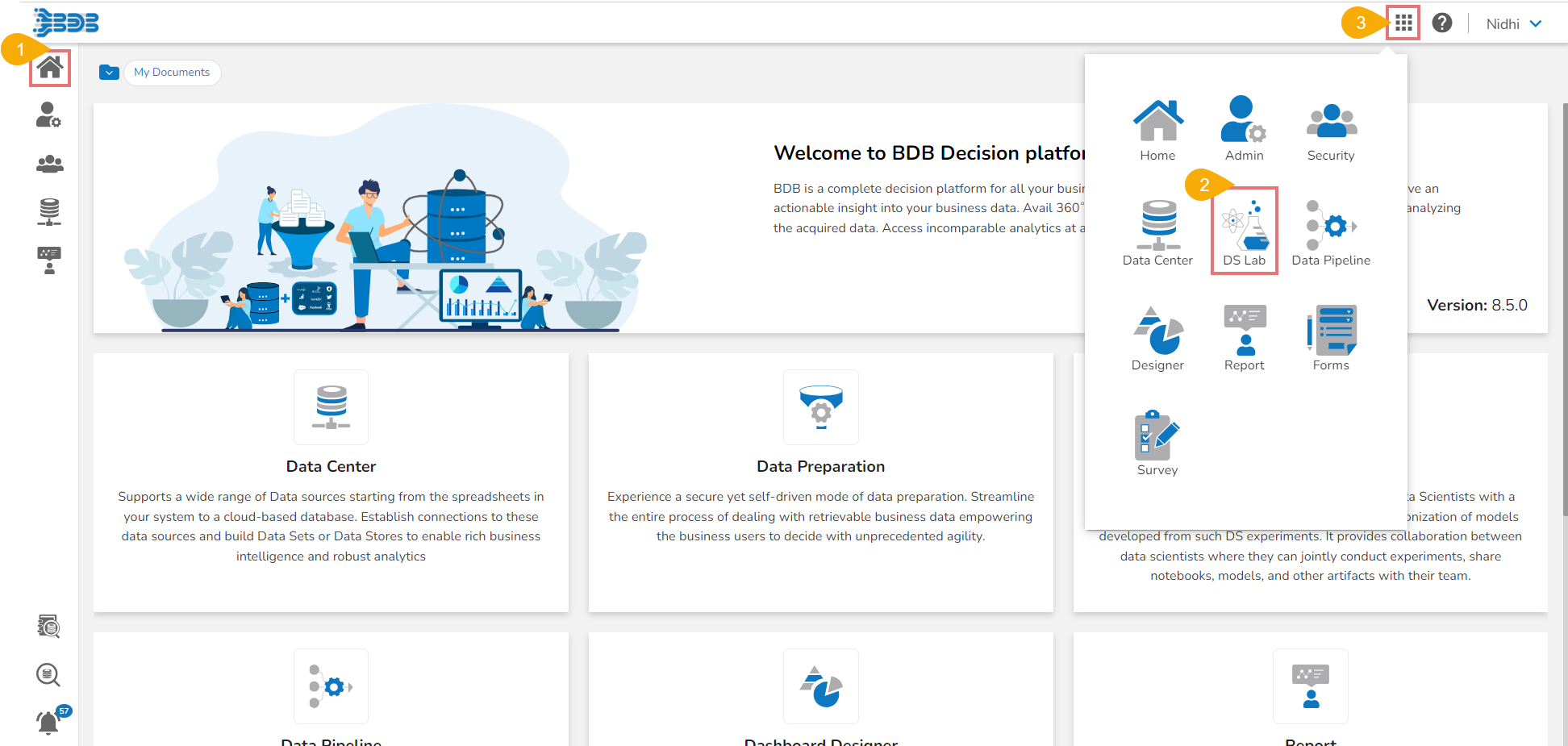

Seamlessly unlock the Data Preparation feature through two convenient pathways:

Whether you're refining datasets or optimizing data for peak performance, our user-friendly interface puts essential tools at your fingertips.

Ensure your data is consistently prepared and ready for action with our intuitive access options.

This includes Jobs, the Data Pipeline module, Notebooks, and seamless API integration.

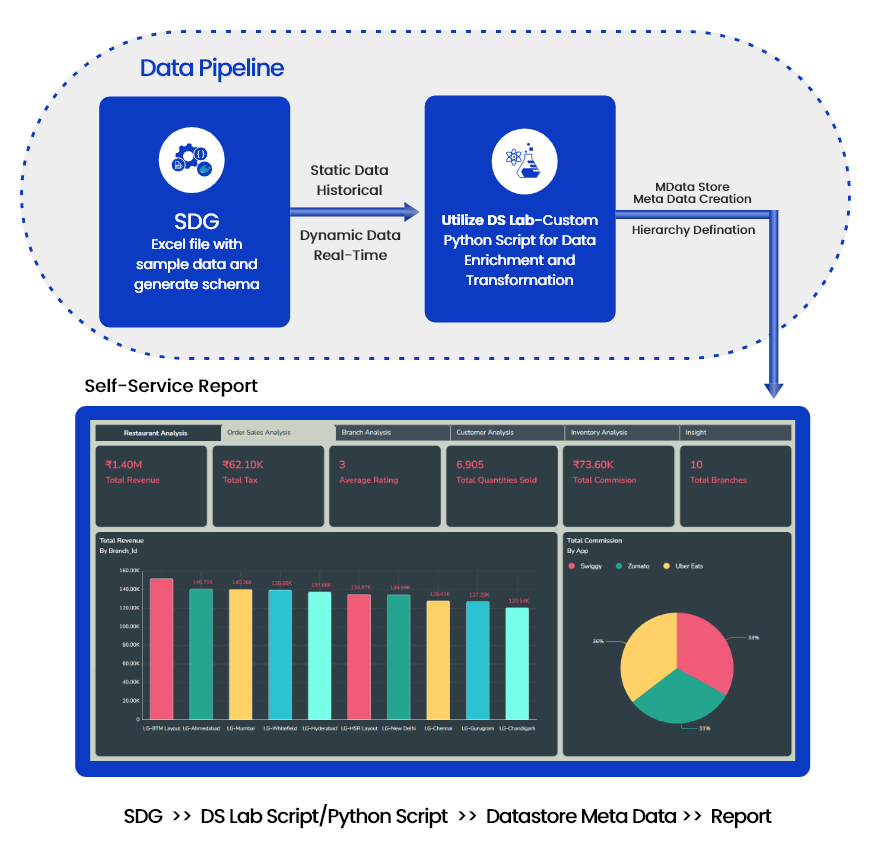

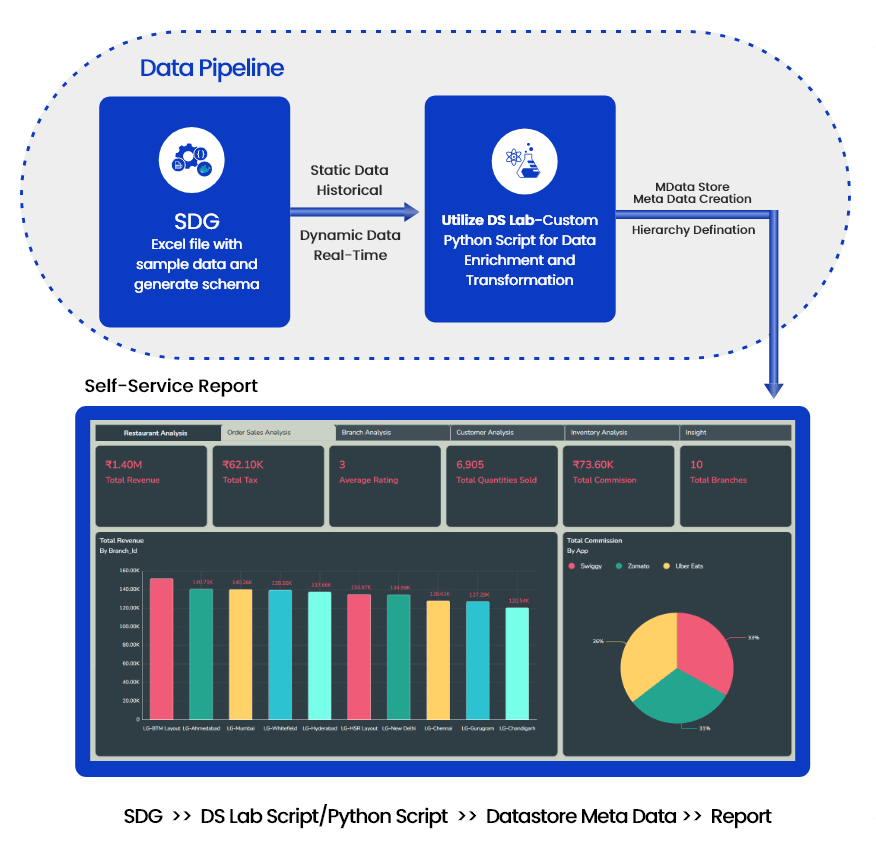

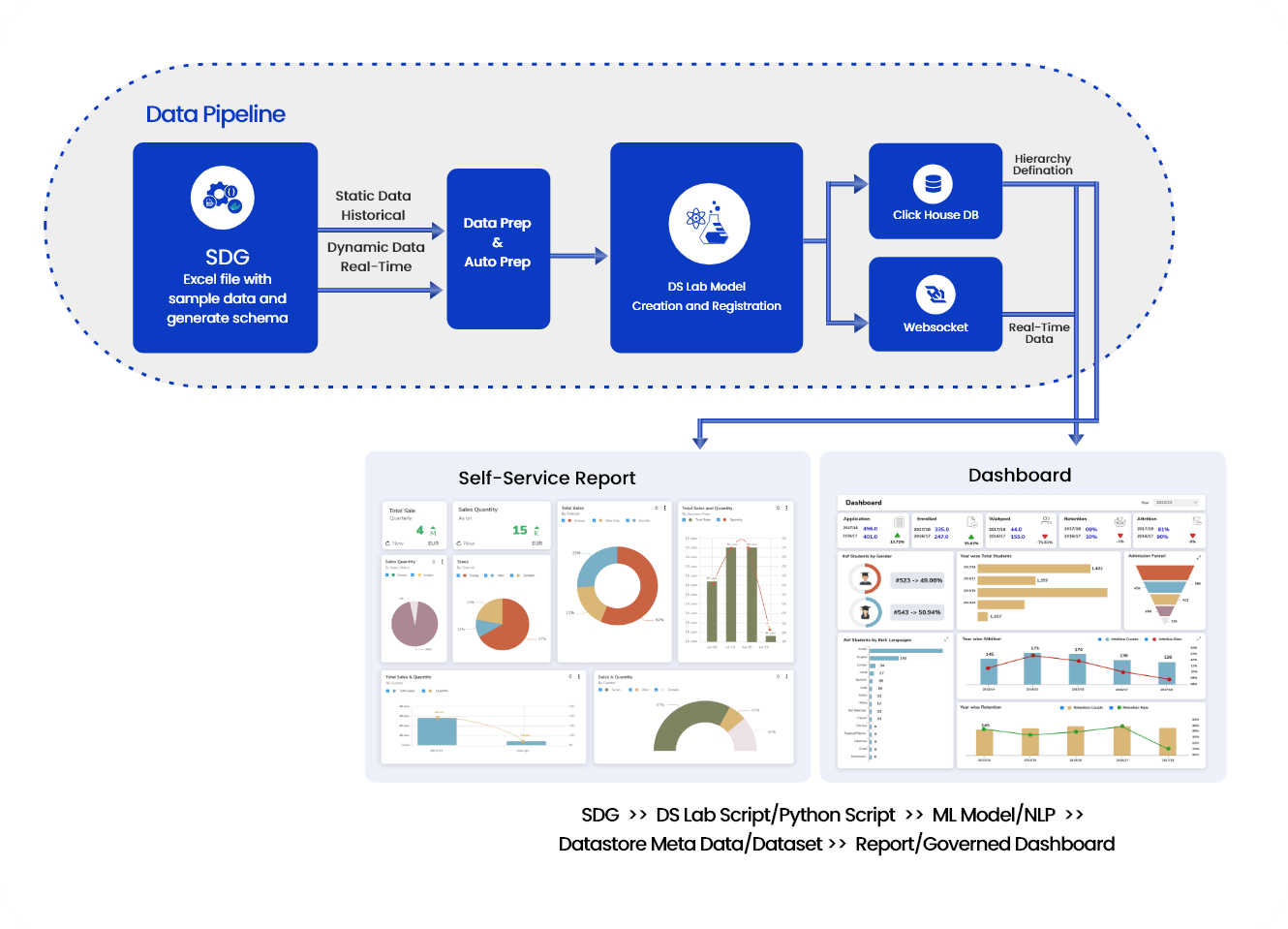

Ingest data (real time, batch, or micro-batch) from multiple sources, enrich it, transform it (via Python code or the BDB Data Preparation tool), push it into any data lake of your choice or into BDB Data Stores, and visualise it in Self Service Reports or Governed Dashboards.
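To make the micro-batch idea concrete, the following is a minimal sketch in plain Python and not BDB's pipeline API. It groups an incoming stream into small fixed-size batches, enriches each event, and appends the result to a list that stands in for a data lake or data store. The names `micro_batches`, `enrich`, and `sink`, and the event fields, are assumptions made for illustration.

```python
from itertools import islice

def micro_batches(stream, size):
    """Yield lists of up to `size` items from any iterable."""
    it = iter(stream)
    while True:
        batch = list(islice(it, size))
        if not batch:
            return
        yield batch

def enrich(event):
    # Hypothetical enrichment step: add a derived field to each event.
    return {**event, "total": event["qty"] * event["price"]}

# A generator stands in for a real-time source.
events = ({"qty": q, "price": 2.5} for q in range(1, 8))

sink = []  # stands in for a data lake or data store
for batch in micro_batches(events, size=3):
    sink.append([enrich(e) for e in batch])

print([len(b) for b in sink])
```

With a batch size of 1 the same loop behaves like event-by-event processing, and with a very large size it behaves like a single batch load.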

Documentation Page

A data pipeline is a structured series of real-time operations that transport, modify, and manage data from diverse sources to a specific destination. This orchestrated real-time data flow is vital for organizations to harness their data effectively, making it accessible and actionable. A well-constructed data pipeline ensures real-time data is collected, cleaned, transformed, and loaded consistently and efficiently, laying the foundation for reliable analytics and decision-making.
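The collect, clean, transform, and load stages described above can be sketched as four small functions chained together. This is a generic Python illustration under stated assumptions and not the BDB Data Pipeline module itself: the sample CSV, the column names, and the in-memory `warehouse` list are all hypothetical.

```python
import csv
import io

# Hypothetical raw input; the Delhi row is missing its reading.
RAW = """city,temp_c
Pune,31.5
Delhi,
Mumbai,29.0
"""

def collect(text):
    """Read raw CSV text into a list of dictionaries."""
    return list(csv.DictReader(io.StringIO(text)))

def clean(rows):
    """Drop rows with a missing temperature."""
    return [r for r in rows if r["temp_c"].strip()]

def transform(rows):
    """Convert Celsius readings to Fahrenheit."""
    return [
        {"city": r["city"], "temp_f": float(r["temp_c"]) * 9 / 5 + 32}
        for r in rows
    ]

def load(rows, destination):
    """Append rows to the destination, here a plain list."""
    destination.extend(rows)
    return destination

warehouse = []
load(transform(clean(collect(RAW))), warehouse)
print([r["city"] for r in warehouse])
```

Keeping each stage as a separate function makes it easy to test a stage in isolation or to replace one, for example swapping the list destination for a real database writer.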

Spark jobs process data using the Spark framework, extracting data from various sources, transforming it to the desired format, and loading the modified data into a target system or data warehouse.

This includes AutoML, utilities, projects, MLOps, and computer vision capabilities.

(All features except GenAI are included in this package.)

Ingest data (real time, batch, or micro-batch) from multiple sources, enrich it, transform it (via Python code or the BDB Data Preparation tool), pass it through multiple models, push it into any data lake of your choice or into BDB Data Stores, and visualise it in Self Service Reports or Governed Dashboards. You can use AutoML or the library of algorithms to build and deploy models quickly in the platform.

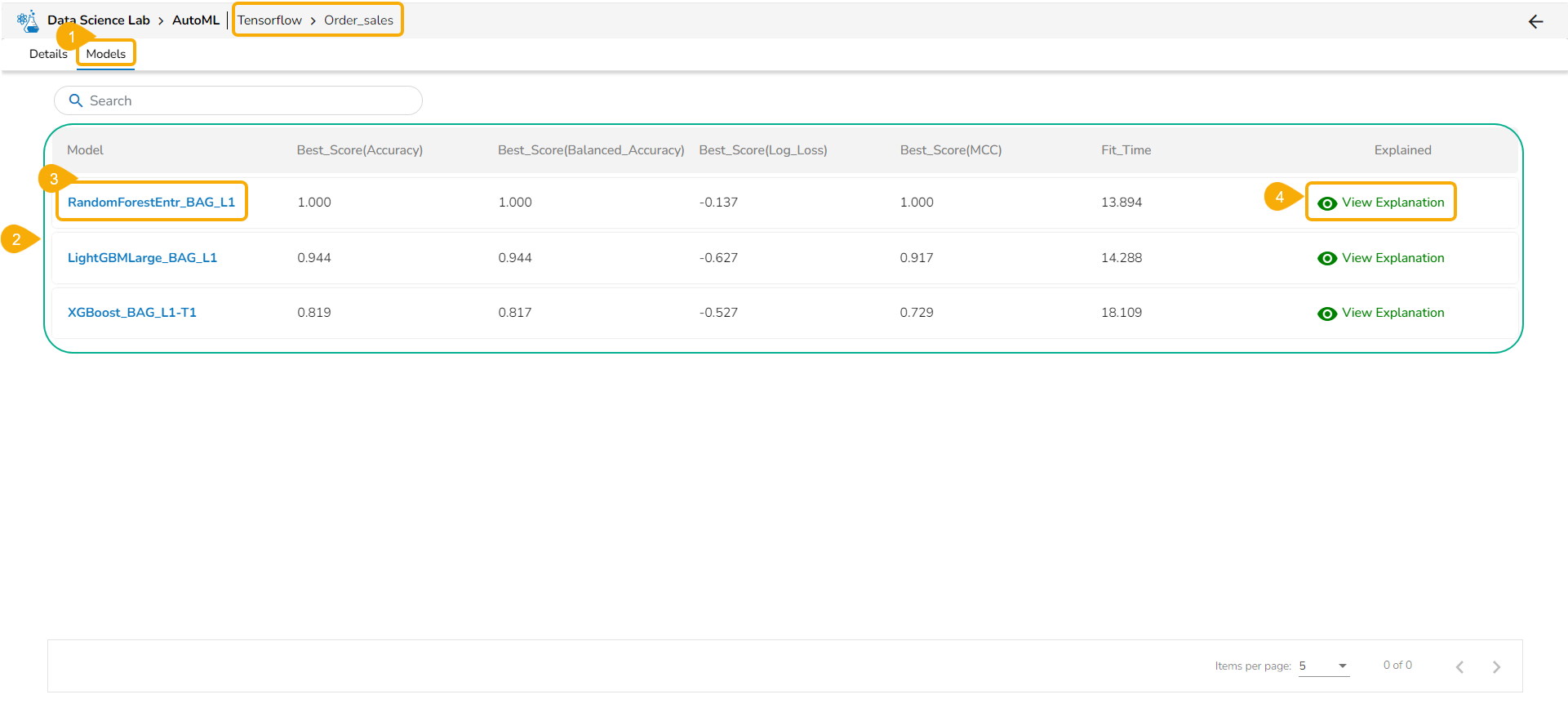

The View Explanation option redirects the user to the options given below. Each one is explained as a separate topic.

The user can publish a DSL model as an API using the Model tab. Only the published models get this option.

Enable LLMs with Assist across all modules, featuring a user-friendly chat interface.

Assist is built into the BDB platform to help developers create content quickly. This package includes the end-to-end platform features (the Data Science package), so it already allocates a higher number of cores.